|

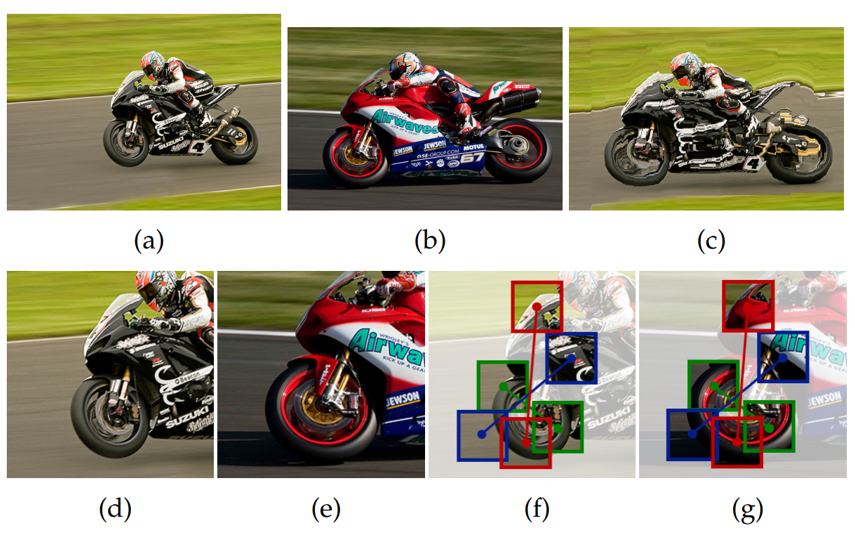

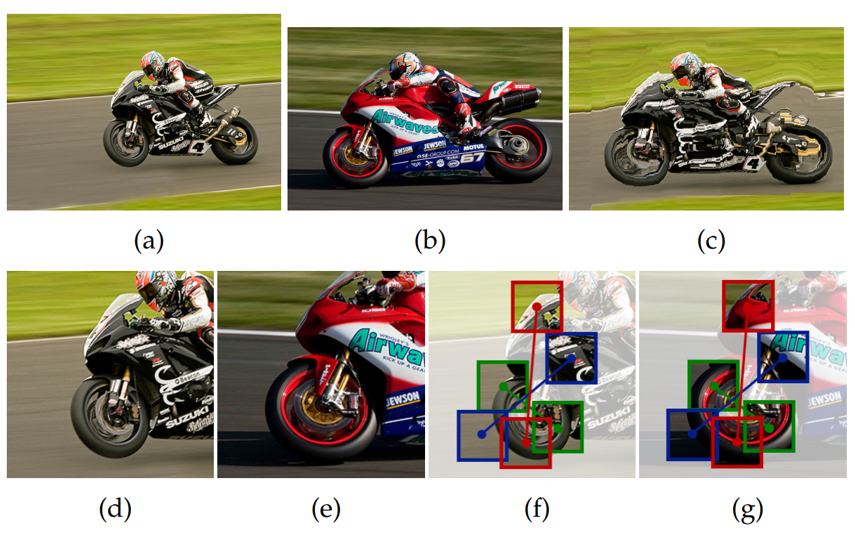

Visualization of our FCSS results: (a) source image, (b) target image, (c) warped source image using dense correspondences, (d), (e) enlarged windows for source and target images, (f), (g) local self- similarities computed by our FCSS descriptor between source and tar- get images. Even though there are significant differences in appearance among different instances within the same object category in (a) and (b), their local self-similarities computed by our FCSS descriptor are preserved as shown in (f) and (g), providing robustness to intra-class appearance and shape variations.

|